ADV, ADV2 and ADV3: Availability and % Actual blocks

Availability is the percentage of assigned slots where the pool attempted to forge blocks. This metric is reduced by missed or invalid blocks, which are in principle within the operator's control. Actual blocks shows real-world pool performance as the percentage of blocks forged and confirmed on chain compared with the ideal number of slots. Both metrics are calculated across the previous 20 epochs.
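As a minimal sketch of how the two headline metrics could be derived from per-epoch records: the `EpochStats` record, its field names and the sample numbers are hypothetical, and the sketch assumes that both missed and invalid slots count against availability, as the paragraph above describes.

```python
from dataclasses import dataclass

@dataclass
class EpochStats:
    """Hypothetical per-epoch record mirroring the table columns."""
    epoch: int
    lead: int       # leader slots assigned
    ideal: float    # ideal leader slots based on active stake
    actual: int     # blocks forged and confirmed on chain
    missed: int     # M: no forging attempt recorded
    invalid: int    # I: attempted but failed to forge

def last_n(epochs: list, n: int = 20) -> list:
    """Keep only the most recent n epochs (assumes chronological order)."""
    return epochs[-n:]

def availability_pct(epochs: list[EpochStats]) -> float:
    """Share of assigned slots not lost to missed or invalid blocks."""
    assigned = sum(e.lead for e in epochs)
    if assigned == 0:
        return 100.0  # nothing was assigned, so nothing was missed
    lost = sum(e.missed + e.invalid for e in epochs)
    return 100.0 * (assigned - lost) / assigned

def actual_blocks_pct(epochs: list[EpochStats]) -> float:
    """Confirmed blocks as a percentage of the ideal slot allocation."""
    ideal = sum(e.ideal for e in epochs)
    confirmed = sum(e.actual for e in epochs)
    return 100.0 * confirmed / ideal if ideal else 0.0

# Illustrative numbers only, not real ADAvault data.
history = [
    EpochStats(epoch=1, lead=30, ideal=28.0, actual=29, missed=0, invalid=1),
    EpochStats(epoch=2, lead=25, ideal=28.0, actual=24, missed=1, invalid=0),
]
window = last_n(history)
print(f"Availability: {availability_pct(window):.1f}%")   # Availability: 96.4%
print(f"Actual blocks: {actual_blocks_pct(window):.1f}%")  # Actual blocks: 94.6%
```

Note that the two metrics use different denominators: availability is relative to slots actually assigned, so it is unaffected by luck, while actual blocks is relative to the ideal allocation and therefore moves with luck.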

We track a large number of metrics to ensure that the ADAvault pools are reliably forging blocks, including:

- leader slots assigned;

- the ideal number of leader slots per epoch, based on pool stake;

- luck, which is leader slots assigned as a percentage of ideal;

- and lastly, the actual blocks forged and confirmed on chain.
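A sketch of how ideal slots and luck might be computed, assuming Cardano mainnet parameters (432,000 one-second slots per epoch and an active slot coefficient f = 0.05) and the Praos per-slot leader probability φ(σ) = 1 − (1 − f)^σ for a pool holding fraction σ of active stake. Many tools instead use the linear approximation 21,600 × σ (21,600 = 432,000 × 0.05); which variant feeds the tables on this page is not stated, and the function names are illustrative.

```python
EPOCH_SLOTS = 432_000      # assumed: mainnet slots per epoch (5 days of 1 s slots)
ACTIVE_SLOT_COEFF = 0.05   # assumed: mainnet active slot coefficient f

def ideal_slots(pool_stake: float, total_active_stake: float) -> float:
    """Expected leader slots per epoch using the Praos leader probability
    phi(sigma) = 1 - (1 - f) ** sigma for relative stake sigma."""
    sigma = pool_stake / total_active_stake
    phi = 1.0 - (1.0 - ACTIVE_SLOT_COEFF) ** sigma
    return EPOCH_SLOTS * phi

def ideal_slots_linear(pool_stake: float, total_active_stake: float) -> float:
    """Common approximation: 21,600 expected blocks per epoch scaled by sigma."""
    return EPOCH_SLOTS * ACTIVE_SLOT_COEFF * pool_stake / total_active_stake

def luck_pct(lead: int, ideal: float) -> float:
    """Luck: leader slots assigned as a percentage of ideal."""
    return 100.0 * lead / ideal

# Hypothetical pool with 70M ADA out of 22B ADA active stake.
ideal = ideal_slots(70e6, 22e9)
linear = ideal_slots_linear(70e6, 22e9)
print(f"ideal={ideal:.1f} linear={linear:.1f} luck={luck_pct(72, ideal):.0f}%")
```

Because φ is concave, the exact formula gives a slightly higher ideal than the linear approximation for any pool below 100% of stake, so the same leader count can show marginally different luck depending on which definition is used.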

Leader slots are determined by a Verifiable Random Function (VRF) evaluated with each pool's VRF key. Over time, luck should average out to around 100%, but there can be significant short-term variance, particularly for smaller pools.
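To put a number on "significant short-term variance", here is a sketch that models each slot as an independent trial won with the same probability, so leader slots per epoch follow a binomial distribution. The 432,000-slot epoch is an assumed mainnet parameter and the function name is illustrative.

```python
import math

EPOCH_SLOTS = 432_000  # assumed: mainnet slots per epoch

def luck_std_dev_pct(ideal: float, epoch_slots: int = EPOCH_SLOTS) -> float:
    """One standard deviation of per-epoch luck, in percentage points.

    Models leader slots as Binomial(epoch_slots, p) with mean `ideal`,
    i.e. each slot is won independently with the same probability p.
    """
    p = ideal / epoch_slots
    std_dev_slots = math.sqrt(epoch_slots * p * (1.0 - p))
    return 100.0 * std_dev_slots / ideal

for ideal in (5, 20, 70):
    print(f"ideal {ideal:>2} slots/epoch: luck about 100% +/- {luck_std_dev_pct(ideal):.0f}%")
```

Under this model one standard deviation is roughly 45, 22 and 12 percentage points for 5, 20 and 70 ideal slots respectively, shrinking as 1/√ideal, which is why a smaller pool sees much larger swings in luck from epoch to epoch.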

ADV

The graph shows leader slots assigned, ideal allocation based on stake, luck (leader slots as a percentage of ideal) and the actual blocks forged and confirmed on the blockchain. Tabular data for the last 20 epochs is shown below.

| Epoch | Lead | Ideal | Luck | Actual | MGSI* |
|-------|------|-------|------|--------|-------|

* M=Missed, G=Ghosted, S=Stolen, I=Invalid (see below for more details).

In some epochs there were stolen blocks, where another pool's block for an assigned slot was adopted on chain, and ghosted blocks, where the pool's own block was orphaned. This is a standard part of the Ouroboros Praos protocol: when competing chains are otherwise equal, the decision is based on the VRF output of the competing blocks. See the discussion of the implementation here: https://github.com/input-output-hk/ouroboros-network/issues/2014
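A simplified sketch of how a slot battle might be resolved, as we understand the VRF tie-break discussed in the linked issue: the longer chain wins, and at equal length the tip with the lower leader VRF output wins. The real node applies further rules (for example for two blocks from the same issuer), and newer releases may restrict when the VRF tie-break applies; `ChainTip`, the pool labels and the two-byte VRF values are illustrative only (real VRF outputs are much longer).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ChainTip:
    pool: str          # hypothetical pool label
    block_no: int      # chain length (block height) at this tip
    leader_vrf: bytes  # leader VRF output from the tip block header

def preferred_tip(a: ChainTip, b: ChainTip) -> ChainTip:
    """Simplified Praos chain selection between two competing tips.

    The longer chain wins. At equal length, the tip with the lower
    leader VRF output wins. Rules such as the operational certificate
    check for blocks from the same issuer are deliberately omitted.
    """
    if a.block_no != b.block_no:
        return a if a.block_no > b.block_no else b
    a_vrf = int.from_bytes(a.leader_vrf, "big")
    b_vrf = int.from_bytes(b.leader_vrf, "big")
    return a if a_vrf <= b_vrf else b

# Two pools elected for the same slot, both extending block 100.
ours = ChainTip(pool="POOL_A", block_no=101, leader_vrf=bytes.fromhex("9f3c"))
theirs = ChainTip(pool="POOL_B", block_no=101, leader_vrf=bytes.fromhex("1a07"))
winner = preferred_tip(ours, theirs)
print(winner.pool)  # POOL_B: from POOL_A's point of view the slot was "stolen"
```

The outcome depends only on values fixed by the VRF, so neither operator can influence a slot battle once both blocks have propagated.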

ADV2

ADV2 shows a similar overall performance profile to ADV, which is expected given that the pool has similar stake and runs on an equivalent hardware and software stack. Again, note the ghosted and stolen blocks, which become more frequent as a pool approaches 100% saturation and is assigned more leader slots.
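A sketch of why larger pools see more slot battles, assuming mainnet parameters (432,000 slots per epoch, f = 0.05), independent leader elections, and σ as the pool's share of active stake. It counts only same-slot collisions; ghosting caused by height battles between nearby slots and propagation delays is not modelled, and the function names are illustrative.

```python
EPOCH_SLOTS = 432_000      # assumed: mainnet slots per epoch
ACTIVE_SLOT_COEFF = 0.05   # assumed: mainnet active slot coefficient f

def leader_prob(sigma: float, f: float = ACTIVE_SLOT_COEFF) -> float:
    """Praos probability that stake fraction sigma wins a given slot."""
    return 1.0 - (1.0 - f) ** sigma

def expected_slot_battles(sigma: float) -> float:
    """Expected slots per epoch where this pool AND some other pool are leaders.

    The rest of the network holds stake fraction 1 - sigma, so it leads a
    slot with probability leader_prob(1 - sigma), independently of this pool.
    """
    return EPOCH_SLOTS * leader_prob(sigma) * leader_prob(1.0 - sigma)

for sigma in (0.0005, 0.001, 0.003):
    print(f"sigma={sigma}: ~{expected_slot_battles(sigma):.2f} same-slot battles per epoch")
```

The chance that any single leader slot is contested stays close to 5% regardless of pool size, so the expected number of battles grows roughly in proportion to stake.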

| Epoch | Lead | Ideal | Luck | Actual | MGSI |
|-------|------|-------|------|--------|------|

ADV3

Slot leader allocation for ADV3 before Epoch 400 was more variable because the pool was smaller: average luck was consistent with the larger pools, but varied more from epoch to epoch. There were fewer stolen and ghosted blocks, as the potential for VRF collisions with other pools was proportionately smaller. However, ADV3 has now grown to the same size as the other pools and its overall performance has converged with theirs.

| Epoch | Lead | Ideal | Luck | Actual | MGSI |
|-------|------|-------|------|--------|------|

Key to headings

Lead: Number of slots in which the pool was scheduled to lead and mint a block

Ideal: Expected number of leader slots based on active stake

Luck: Leader slots assigned as a percentage of ideal

Actual: Number of blocks forged and subsequently confirmed on chain

Invalid: The node attempted but failed to forge a block (cause not determined)

Missed: The pool was scheduled to lead the slot, but there is no record of a block in the cncli database and no other pool made a block for this slot

Ghosted: A block was created but orphaned, and no other pool made a valid block for this slot; possibly caused by a height battle or a block propagation issue

Stolen: Another pool has a valid block on chain for the same slot, possibly because more than one pool was assigned the slot and the other block won the VRF tie-break
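The key above amounts to a small decision procedure for each assigned slot. A hedged sketch follows: the boolean inputs are hypothetical stand-ins for what a cncli database and chain data would provide (the real schema is not shown here), and the precedence when a failed attempt coincides with another pool's block (counted as stolen) is our assumption.

```python
from enum import Enum

class SlotOutcome(Enum):
    CONFIRMED = "confirmed"
    MISSED = "M"
    GHOSTED = "G"
    STOLEN = "S"
    INVALID = "I"

def classify_slot(
    forge_attempted: bool,       # hypothetical: a forging record exists for the slot
    forge_succeeded: bool,       # hypothetical: the node produced a valid block
    our_block_on_chain: bool,    # our block was adopted on chain
    other_block_on_chain: bool,  # another pool's block for this slot is on chain
) -> SlotOutcome:
    """Map one assigned leader slot to the key used in the MGSI column."""
    if our_block_on_chain:
        return SlotOutcome.CONFIRMED
    if other_block_on_chain:
        return SlotOutcome.STOLEN      # another pool holds the slot on chain
    if not forge_attempted:
        return SlotOutcome.MISSED      # no record and no competing block
    if not forge_succeeded:
        return SlotOutcome.INVALID     # attempted but failed to forge
    return SlotOutcome.GHOSTED         # forged, then orphaned, no rival block

def mgsi_summary(outcomes: list[SlotOutcome]) -> str:
    """Format counts as M/G/S/I (hypothetical display format)."""
    order = (SlotOutcome.MISSED, SlotOutcome.GHOSTED, SlotOutcome.STOLEN, SlotOutcome.INVALID)
    return "/".join(str(outcomes.count(o)) for o in order)

outcomes = [
    classify_slot(True, True, True, False),
    classify_slot(True, True, False, True),
    classify_slot(False, False, False, False),
]
print(mgsi_summary(outcomes))  # 1/0/1/0
```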

Historic performance data is refreshed hourly, so there will be some lag relative to the real-time block production statistics for the current epoch.

Observability

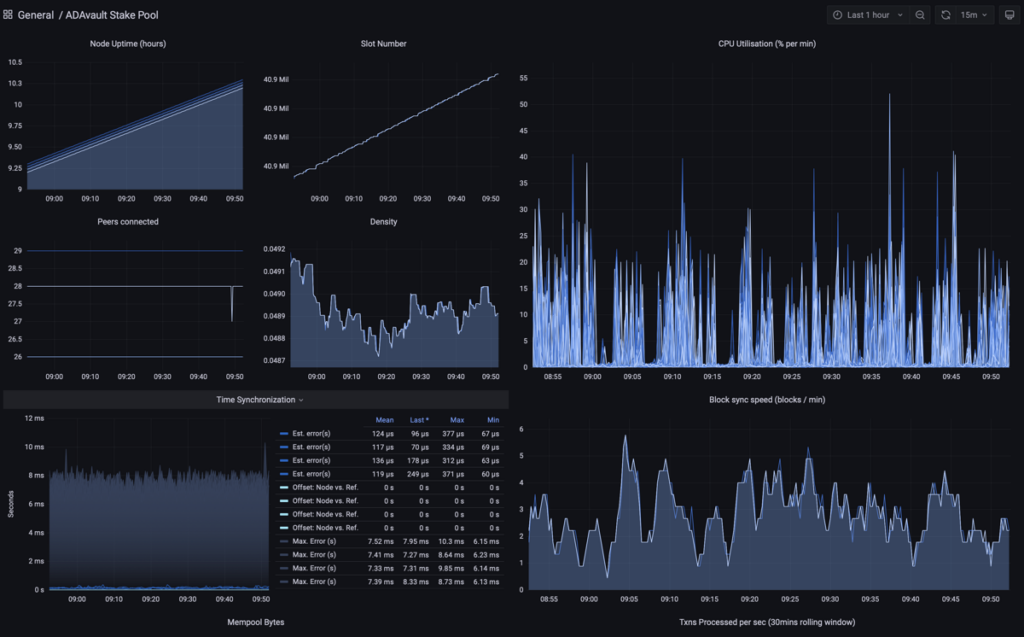

ADAvault uses BetterStack for alerting, plus Prometheus and Grafana to monitor infrastructure and collect data from nodes.
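As an illustration of the kind of check this monitoring enables, here is a minimal sketch that scrapes a node's Prometheus endpoint and flags a stalled chain tip. The URL (port 12798 is a common default when Prometheus output is enabled in the node config) and the metric name are assumptions that depend on node version and tracing configuration; forwarding the result to an alerting service such as BetterStack is not shown.

```python
import urllib.request

# Assumptions: the node's Prometheus endpoint is enabled on this host/port
# and exposes the chain tip height under this metric name.
METRICS_URL = "http://127.0.0.1:12798/metrics"
BLOCK_METRIC = "cardano_node_metrics_blockNum_int"

def parse_metrics(text: str) -> dict[str, float]:
    """Parse unlabelled samples from the Prometheus text exposition format."""
    samples: dict[str, float] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or HELP/TYPE comment
        parts = line.split()
        if len(parts) < 2 or "{" in parts[0]:
            continue  # labelled series are skipped in this sketch
        try:
            samples[parts[0]] = float(parts[1])
        except ValueError:
            continue
    return samples

def fetch_metrics(url: str = METRICS_URL) -> dict[str, float]:
    """Scrape the endpoint once (requires a running node)."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return parse_metrics(resp.read().decode("utf-8"))

def chain_stalled(prev_height: float, curr_height: float,
                  elapsed_s: float, max_gap_s: float = 300.0) -> bool:
    """True if the tip has not advanced for at least max_gap_s seconds."""
    return curr_height <= prev_height and elapsed_s >= max_gap_s
```

With an active slot coefficient of 0.05, mainnet produces a block roughly every 20 seconds on average, so five minutes without a new tip is a strong signal that the node has fallen behind; the threshold itself is an arbitrary choice.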

Stake pool operational availability remains near 100%, and the Cardano node software has proven very stable. We always run a comprehensive soak test on the PreProd testnet before deploying newly compiled releases.

ADAvault pools have very low latency to their peers. This is important because it increases the likelihood that blocks forged by the pools are adopted on chain. Real-time details are available from PoolTool.io, which measures block propagation delays across all registered pools.

We regularly review global peer connectivity and are confident the pools have excellent performance characteristics for reliable block production.
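PoolTool.io's exact method is not described here, but one way to express propagation delay is the time between the start of a block's slot and its arrival at a peer. A sketch, assuming mainnet timing: the Shelley era began at slot 4,492,800 (2020-07-29T21:44:51Z) with one-second slots thereafter. The observation format and function names are hypothetical.

```python
from datetime import datetime, timezone
from statistics import median

# Assumed mainnet constants: the Shelley era began at slot 4,492,800
# (2020-07-29T21:44:51Z) and every later slot lasts one second.
SHELLEY_START_SLOT = 4_492_800
SHELLEY_START_UNIX = 1_596_059_091

def slot_start_unix(slot: int) -> int:
    """Unix time (seconds) at which a post-Byron mainnet slot begins."""
    if slot < SHELLEY_START_SLOT:
        raise ValueError("Byron-era slots (20 s each) are not handled here")
    return SHELLEY_START_UNIX + (slot - SHELLEY_START_SLOT)

def slot_start_utc(slot: int) -> datetime:
    """Slot start as a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(slot_start_unix(slot), tz=timezone.utc)

def propagation_delay_ms(slot: int, received_unix_ms: int) -> int:
    """Delay between the slot starting and a peer receiving the block."""
    return received_unix_ms - slot_start_unix(slot) * 1000

def median_delay_ms(observations: list[tuple[int, int]]) -> float:
    """Median delay over (slot, received_unix_ms) pairs (hypothetical input)."""
    return median(propagation_delay_ms(slot, t) for slot, t in observations)
```

The median is used rather than the mean so that a single slow or badly clocked peer does not dominate the figure.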